Building Safe Communities with AI-powered Content Moderation

Keeping your online community safe and welcoming is crucial for a positive user experience. One way to achieve this is to moderate content and filter out harmful or inappropriate text. In this article, we'll explore how to implement automated text content moderation in JavaScript: first with a basic word list, and then, drawing on recent developments in AI and large language models, with an AI-powered API.

Solution 1: Basic moderation without AI

Step 1: Define a list of banned words and phrases

Start by defining a list of words and phrases that you want to filter out from user-generated content. These can include profanity, hate speech, or any other content that is not appropriate for your platform.

const bannedWords = [
  "loser",
  "idiot",
  "hate",
  "kill",
  // Add more words/phrases as needed
];

Step 2: Implement the content moderation function

Create a function that checks user-generated content against the list of banned words and phrases.

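// Returns true if the text contains a banned word or phrase (content is not safe).
// Entries in bannedWords are expected to be lowercase.
function moderateContent(text) {
  const lowerText = text.toLowerCase(); // lowercase once for case-insensitive matching
  for (const word of bannedWords) {
    if (lowerText.includes(word)) {
      return true; // Content is not safe
    }
  }
  return false; // Content is safe
}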
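One limitation of substring matching is that it also flags harmless words that merely contain a banned word: "skill" contains "kill", and "whatever" contains "hate". A minimal sketch of a stricter variant that only matches whole words could look like the following. The names moderateContentWholeWords, escapeRegExp, and bannedPattern are illustrative and not part of the original example.

```javascript
const bannedWords = ["loser", "idiot", "hate", "kill"];

// Escape regex metacharacters so each banned phrase is matched literally.
function escapeRegExp(s) {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// One case-insensitive pattern that matches any banned word as a whole word.
const bannedPattern = new RegExp(
  `\\b(?:${bannedWords.map(escapeRegExp).join("|")})\\b`,
  "i"
);

// Returns true when the text contains a banned word (content is not safe).
function moderateContentWholeWords(text) {
  return bannedPattern.test(text);
}

console.log(moderateContentWholeWords("Hey, you're such a loser!")); // true
console.log(moderateContentWholeWords("Whatever, that takes real skill.")); // false
```

Note that `\b` treats only ASCII letters, digits, and underscores as word characters, so this sketch is best suited to English word lists.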

Step 3: Moderating text content

Use the moderateContent function to check user-generated content before displaying it.

const textToModerate = "Hey, you're such a loser!";

if (moderateContent(textToModerate)) {
  console.log("This text is not safe.");
} else {
  console.log("Text is safe.");
}

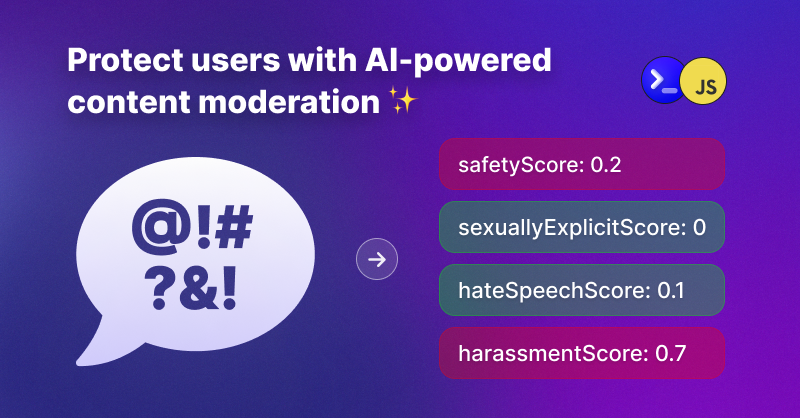

Solution 2: Use an AI-powered API ✨

While implementing a basic content moderation system within your application is possible, a word list only matches exact strings. An AI-powered solution uses machine learning models that can take context into account and detect subtler forms of harmful content, such as insults that contain no banned word, so it can be more accurate than simple word matching. A hosted API can also scale to large volumes of user-generated content without you having to maintain word lists yourself, saving you time and effort in the long run.
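To make the limits of word matching concrete, the sketch below reruns the keyword check from Solution 1 on two messages. Both sample sentences are made up for illustration: the first is harmless but contains a banned word, and the second is hurtful but contains none.

```javascript
const bannedWords = ["loser", "idiot", "hate", "kill"];

// Same keyword check as in Solution 1, written compactly.
function moderateContent(text) {
  const lowered = text.toLowerCase();
  return bannedWords.some((word) => lowered.includes(word));
}

// Harmless message that happens to contain a banned word.
console.log(moderateContent("I hate Mondays.")); // true (false positive)

// Hurtful message that uses no banned word at all.
console.log(moderateContent("Nobody here would miss you.")); // false (false negative)
```

Neither mistake can be fixed by adding or removing words from the list, which is the gap a context-aware model is meant to close.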

Prerequisite: Sign Up for ExoAPI

Visit ExoAPI and sign up for an account to obtain your API key.

1. Install the SDK

npm install @flower-digital/exoapi-sdk

2. Import and initialize the SDK with your API key

import { ExoAPI } from "@flower-digital/exoapi-sdk";

// Replace the placeholder with the API key from your ExoAPI account.
const exoapi = new ExoAPI({ apiKey: "YOUR_API_KEY" });

3. Moderate text content

You can now use the contentModeration method to moderate text content:

const textToModerate = "Hey, you're such a loser!";

exoapi
  .contentModeration({ text: textToModerate })
  .then((result) => {
    if (result.safetyScore < 0.3) {
      console.log("This text is not safe.");
    } else {
      console.log("Text is safe.");
    }
  })
  .catch((err) => console.error("An error occurred:", err));
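The same check can also be written with async/await and wrapped in a small helper. The sketch below is one possible shape and not part of the SDK: isTextSafe and its moderate parameter are hypothetical names, and the 0.3 threshold is carried over from the example above. The helper receives the moderation call as an argument, so it can be tried with a stub and no API key, and it treats a failed request as "not safe" so that content is held back rather than published unchecked.

```javascript
// Hypothetical helper: `moderate` is any function that takes text and returns
// a promise of an object with a numeric `safetyScore` field.
async function isTextSafe(moderate, text, threshold = 0.3) {
  try {
    const result = await moderate(text);
    return result.safetyScore >= threshold;
  } catch (err) {
    // Fail closed: if the check cannot run, do not treat the text as safe.
    console.error("Moderation request failed:", err);
    return false;
  }
}

// Stub standing in for the real API call, so the sketch runs offline.
const stubModerate = async (text) => ({
  safetyScore: text.includes("loser") ? 0.2 : 0.9,
});

isTextSafe(stubModerate, "Hey, you're such a loser!").then((safe) => {
  console.log(safe ? "Text is safe." : "This text is not safe.");
});
```

With the client from step 2, the stub would be replaced by a function that forwards to the SDK, for example `(text) => exoapi.contentModeration({ text })`.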

Handling the response

The contentModeration method returns a JSON object containing the following fields:

- safetyScore: A score between 0 and 1 expressing how safe the content is.
- reason: A short explanation of the text moderation analysis.
- hateSpeechScore: Probability between 0 and 1 of the text containing hate speech.
- dangerousContentScore: Probability between 0 and 1 of the text containing dangerous elements.
- harassmentScore: Probability between 0 and 1 of the text containing harassment.
- sexuallyExplicitScore: Probability between 0 and 1 of the text containing sexually explicit language.

For example, with the text above you would get:

{
  "safetyScore": 0.2,
  "reason": "The text contains an insult and is therefore considered offensive.",
  "hateSpeechScore": 0.29,
  "dangerousContentScore": 0.15,
  "harassmentScore": 0.64,
  "sexuallyExplicitScore": 0.09
}

You can use these scores to set thresholds that match what is acceptable within your community and to reduce false positives.
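One way to act on these fields is to combine the overall score with per-category limits and route borderline content to a human moderator. A minimal sketch follows. The decideAction function, the three outcomes, and every threshold value except 0.3 are illustrative assumptions to tune for your own community, not ExoAPI recommendations.

```javascript
// Illustrative thresholds (assumptions, not values recommended by ExoAPI).
const thresholds = {
  minSafetyScore: 0.3, // below this, block outright (as in the example above)
  reviewSafetyScore: 0.6, // below this, send to a human moderator
  maxCategoryScore: 0.8, // any single category above this also blocks
};

const categoryFields = [
  "hateSpeechScore",
  "dangerousContentScore",
  "harassmentScore",
  "sexuallyExplicitScore",
];

// Maps a moderation result to "block", "review", or "allow".
function decideAction(result, limits = thresholds) {
  const worstCategory = Math.max(...categoryFields.map((f) => result[f] ?? 0));
  if (result.safetyScore < limits.minSafetyScore) return "block";
  if (worstCategory > limits.maxCategoryScore) return "block";
  if (result.safetyScore < limits.reviewSafetyScore) return "review";
  return "allow";
}

// The example response shown above.
const example = {
  safetyScore: 0.2,
  reason: "The text contains an insult and is therefore considered offensive.",
  hateSpeechScore: 0.29,
  dangerousContentScore: 0.15,
  harassmentScore: 0.64,
  sexuallyExplicitScore: 0.09,
};

console.log(decideAction(example)); // "block"
```

A stricter community could raise minSafetyScore, while a more permissive one could rely mostly on the "review" outcome.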

Conclusion

While it is possible to create a basic content moderation system within your application, an AI-powered solution like ExoAPI can give more accurate results because it considers context rather than only matching words. It can also handle large volumes of user-generated content with little maintenance on your side. Prioritizing the safety and well-being of your online community is essential, and AI-powered content moderation can help keep your platform a safe and welcoming space for all users.